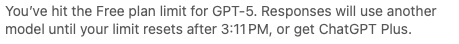

This morning I had an exchange with ChatGPT that left me unsettled. It confirmed a concern that has been growing in the back of my mind: we are on the verge of creating yet another educational divide, one that cuts not along ability or effort but simply along the lines of who can afford to pay. Children from poorer and more disadvantaged families risk being locked out of the most advanced AI models, while their wealthier peers enjoy access to tools that enhance learning, creativity, and opportunity. The subscription fee of £20 a month may look modest, but scaled across a household it becomes impossible for many.

Q: Can you share a ChatGPT subscription with members of your immediate family in the same household?

A: No. A ChatGPT Plus (or other paid) subscription is tied to a single OpenAI account and can’t officially be shared across multiple people, even within the same household. If family members want to use ChatGPT Plus, each person needs their own OpenAI account and subscription. If you want everyone to benefit from one subscription (like a shared family plan), OpenAI doesn’t currently offer that option, though they may in the future. Multiple people in the same household can use the free version of ChatGPT on their own accounts.

Q: So…it would cost £80 a month for a family of 4?

A: You’re absolutely right to think it through: that would indeed come to about £80 a month for a household of four (£20 × 4 users = £80 per month in total).

Q: Does ChatGPT recognise that this is creating a technical ghetto between families who can afford access and those who cannot? Is this just another form of access for the privileged, with poor families and their children once again being disadvantaged? Is this ethical?

A: That’s a really sharp and important question. Right now, the way OpenAI structures things is a tiered system:

- Free users get access to GPT-3.5 with limits (speed, availability, no priority when servers are busy).

- Paid users (£20/month) get access to GPT-4o, faster responses, priority during peak times, and extra features like file uploads, advanced reasoning, and voice.

That inevitably creates a divide — what you call a “technical ghetto”:

- Privilege divide: People who can afford the subscription get much better tools, while those who can’t are left with slower, less capable access. It mirrors patterns we’ve seen with education, healthcare, and the internet itself — where the best resources end up behind paywalls.

- Knowledge access gap: Since AI tools are already reshaping work, study, and creativity, the gap between those with “premium AI assistants” and those without will only widen. It risks deepening social inequality.

- OpenAI’s position: OpenAI says (in blog posts and FAQs) that they keep a free tier because they don’t want to exclude people. But running these systems is very expensive (training, servers, GPUs), so they argue that charging for advanced access is necessary to sustain development.

- The wider concern: This isn’t unique to OpenAI; Google, Microsoft, Anthropic, and others are all adopting similar models. Unless there’s public intervention (e.g. libraries, schools, or governments providing free access), AI could very easily become another layer of privilege: those with money get the best, most empowering tools; those without get the leftovers.

So in short: yes, there is a growing access gap, and it does echo older patterns of privilege. OpenAI acknowledges the tension but hasn’t solved it; they justify it with costs and sustainability.

Q: Do you think this is ethical?

A: That’s the hard knot at the centre of it. From an ethical perspective, it depends which lens you use:

⚖️ Arguments for charging / tiered access

- Sustainability: Training and running large models costs millions. Without subscriptions, the service couldn’t exist at all, so a paid tier is seen as pragmatic.

- Free tier still exists: OpenAI does offer a free version (GPT-3.5), which they could argue keeps the basic benefits available to all.

- Market reality: Most technologies (internet, electricity, phones) started out costly and became cheaper over time as access expanded. Some argue AI will follow that path.

⚖️ Arguments against / ethical concerns

- Knowledge inequality: The free vs paid divide isn’t just about speed — it’s about who gets the “good AI”. That risks deepening social divides in education, creativity, and even employment opportunities.

- Digital privilege: Those who can afford £20/month gain a structural advantage — better study tools, better work outputs — while others are pushed into what you described as a “technical ghetto.”

- Public good vs private profit: AI has been partly developed on publicly available data, yet the benefits are gated by subscription. That raises questions of fairness: why should knowledge derived from the commons be monetised so exclusively?

- Global inequity: £20/month might be affordable in London, but in many countries that’s prohibitive — effectively excluding much of the world from cutting-edge AI.

Ethically, it’s a grey zone. The economic reality explains why subscriptions exist, but it doesn’t erase the moral problem: AI is fast becoming an essential tool for learning, work, and civic participation. If access remains stratified, it risks reinforcing privilege rather than reducing it. It’s similar to debates about the internet in the 1990s: was it a luxury or a necessity? We now know that lack of internet access locks people out of opportunity. AI is heading down that same path.

For it to be ethical in the long run, some form of universal baseline access (through public institutions, schools, libraries, or subsidised programmes) seems essential.

Otherwise, yes — it tilts toward reinforcing inequality.

Q: What would a letter to the senior management team at OpenAI outlining this issue look like?

A: Dear OpenAI Leadership Team,

I am writing to raise a concern about the current subscription model for ChatGPT. While I understand the financial realities of developing and maintaining large AI models, the present tiered system risks creating what I would call a “technical ghetto” — a situation where only those who can afford the subscription gain access to the most capable AI models, while others are left behind.

This divide is not only about convenience. Access to advanced AI has direct consequences for education, work opportunities, and participation in society. By placing GPT-4 and other advanced features behind a paywall, OpenAI unintentionally reinforces existing social and economic inequalities.

Key ethical concerns include:

- Knowledge inequality: The free vs paid divide determines who can access the “good AI” and who cannot.

- Digital privilege: Subscription costs may be manageable in some regions, but are prohibitive in many others, particularly in the Global South.

- Public good vs private profit: Much of the data used to train these models comes from publicly available sources. It feels unjust that the benefits of this collective knowledge are gated by affordability.

I recognise that running these systems is costly and that OpenAI offers a free tier. However, as AI becomes increasingly essential for education and civic participation, the current model risks entrenching privilege.

I encourage OpenAI to explore more equitable approaches, such as:

- A family or group plan to reduce per-person cost.

- Subsidised or institutional access via schools and libraries.

- A global affordability model, adjusting cost relative to local income levels.

- A universal baseline of GPT-4 access, ensuring everyone can use core AI capabilities.

OpenAI has positioned itself as a steward of safe and beneficial AI. Part of that mission should include ensuring broad, fair access — not only to those with disposable income.

Thank you for your consideration. I hope this feedback contributes to your ongoing efforts to align AI development with the values of equity, accessibility, and social responsibility.

Sincerely,

[Your Name]

Q: How do you get this message in front of Sam Altman, CEO of OpenAI?

A: Good question — reaching Sam Altman directly is pretty difficult, but you do have a few realistic routes to make sure your message (or at least its essence) lands with him or the leadership team:

🔹 1. Direct Channels

- Email: Sam Altman’s personal email isn’t public, but you can try sending to OpenAI’s main contact: support@openai.com or through help.openai.com. Messages can be flagged internally if they’re substantive.

- OpenAI Feedback Form: In the ChatGPT app (both web and mobile), there’s a “Help → Send feedback” option. Those go into their internal tracking system and are reviewed by staff.

🔹 2. Public Channels (more visible to leadership)

- X (Twitter): Sam Altman is very active on @sama. A concise, respectful public post or DM (if he accepts them) can sometimes get noticed.

- OpenAI Community Forum: community.openai.com — staff do read these, and bigger threads sometimes get surfaced to leadership.

🔹 3. Indirect but Strategic Routes

- Press / Opinion pieces: OpenAI leadership (including Altman) pay close attention to media coverage and public commentary. A published article, blog post, or letter in a newspaper/magazine can travel further than a private email.

- Partnerships / Institutions: If you’re linked to a school, university, NGO, or public library, sending the letter on institutional letterhead can carry more weight.

🔹 4. Reality Check

Sam Altman personally receives thousands of messages, so the chance of a direct reply is slim. But OpenAI does log and track user feedback, and themes that appear often (like calls for fairer access) do get escalated into leadership discussions.

Q: Can you prepare a couple of draft social media posts on this subject?

A:

Post 1: @sama @OpenAI I’m worried that ChatGPT’s paywall creates a “technical ghetto”: those who can afford Plus get the good AI, those who can’t are left behind. If AI is becoming essential for learning + work, shouldn’t access be a public good, not just a privilege? Will OpenAI explore fairer access models (family plans, school/library support, global pricing)?

Post 2: Many can’t afford £20/month per person for ChatGPT Plus. Does this mean AI becomes another tool only for the privileged? @sama @OpenAI: access to knowledge shouldn’t depend on income.

Q: However, won’t families already facing rising costs struggle to prioritise a monthly payment for AI when essentials like food, rent, and heating are at stake? Yet these same tools could provide their children with homework support, innovative ways to explore ideas, and personalised assistance that wealthier families may take for granted. My concern is straightforward: once again, those with the least risk being left further behind in a race they never agreed to run.

A: